Where are you getting all those answers to all those questions you’re asking through ChatGPT or similar tools?

The source is a foundation model.

So let’s take a dive into the world of foundation models…

What is GenAI?

A branch of AI used for generating new content such as text, images or audio. For example, you ask an application to write an explanation of GenAI and it gives you a detailed response.

What is a Model?

A model is the brains of the operation….

….it is trained on large amounts of data, using AI and machine learning, so that it can answer a vast number of questions.

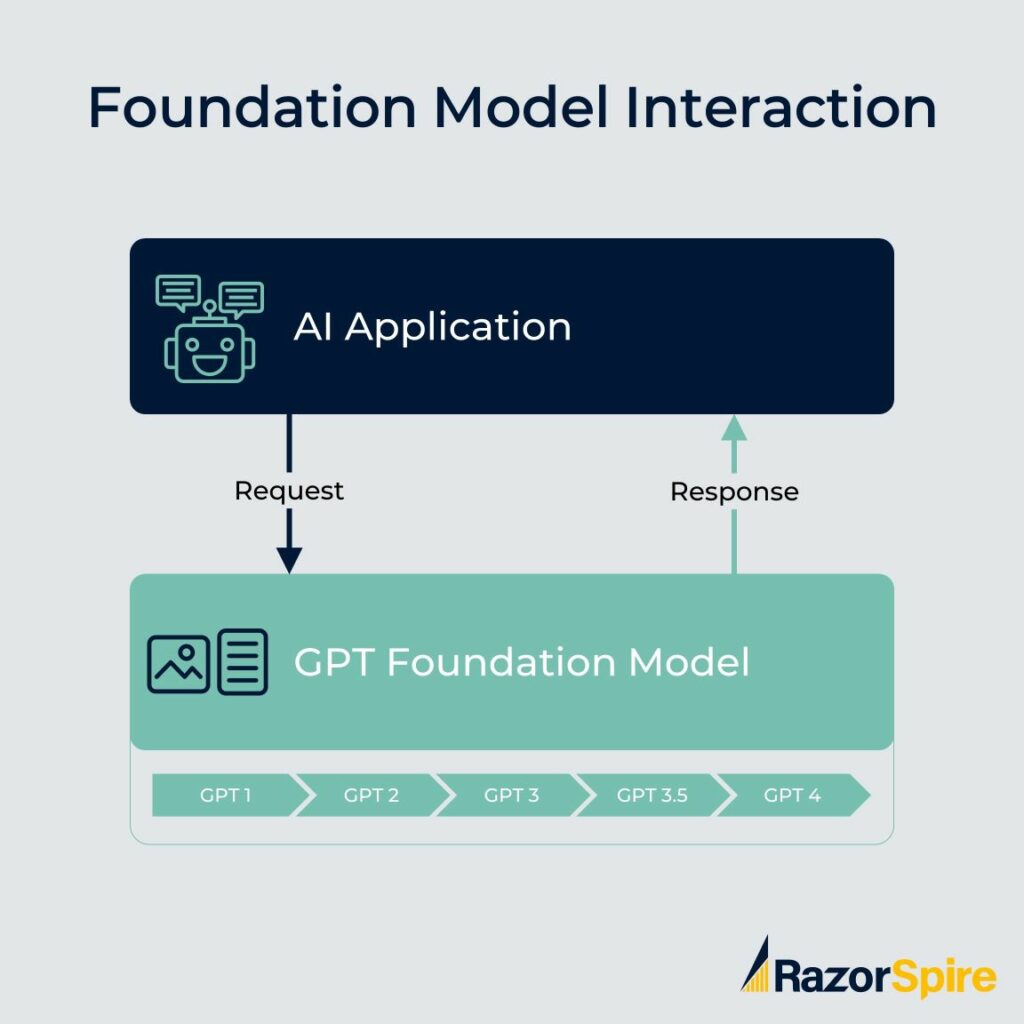

A model on its own is no use until you build an application that sits on top of it to access it.

A small model is built for specialised tasks.

A foundation model is……… read the next question!!!

What is a GenAI Foundation Model?

A foundation model is one that is really broad and is built to handle a vast range of questions and tasks.

For example, GPT is a foundation model that ChatGPT is built on. Many other applications besides ChatGPT are also built on GPT.

Note: To make it easier to access the model an API (application programming interface) is built. This makes it easy to send requests and get responses back without knowing the details of the model.

Think of it like you’re looking for something in a house….

….Without any knowledge of the house you can walk around it for hours looking for what you want, or you can stand outside and ask someone who has detailed knowledge of the house to get it for you.

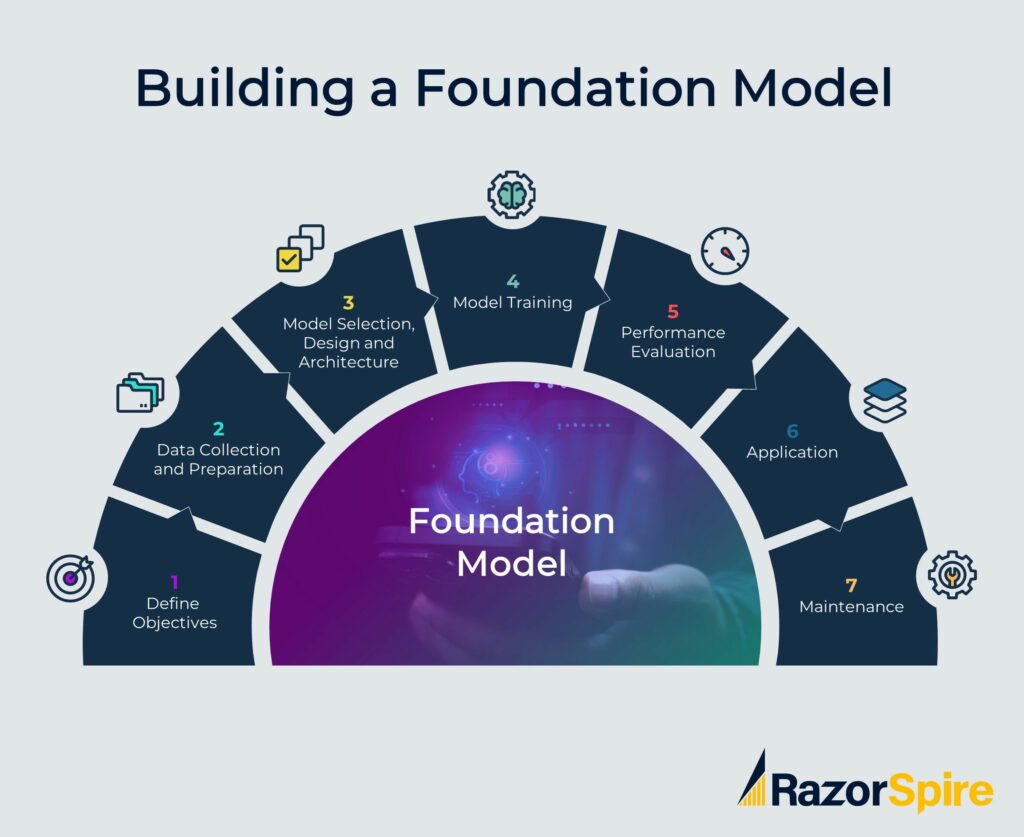
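To make the house analogy a bit more concrete, here is a minimal sketch of the idea in Python. Everything in it is made up for illustration: `ToyModel`, `ModelAPI`, `handle_request` and the request/response shape are hypothetical names, not how GPT or any real API works. The point is only that the caller sends a request and reads a response without touching the model’s internals.

```python
class ToyModel:
    """Hypothetical stand-in for a real model; the caller never uses it directly."""

    def __init__(self):
        # Pretend this is what the model learned during training.
        self.knowledge = {"genai": "GenAI is a branch of AI that generates new content."}

    def tokenise(self, text):
        # Break the question into lowercase words.
        return text.lower().replace("?", "").split()

    def generate(self, tokens):
        # Return the first piece of knowledge that matches a word in the question.
        for token in tokens:
            if token in self.knowledge:
                return self.knowledge[token]
        return "Sorry, I don't know."


class ModelAPI:
    """The person at the door: takes a request, deals with the model, returns a response."""

    def __init__(self, model):
        self._model = model

    def handle_request(self, request):
        tokens = self._model.tokenise(request["prompt"])
        return {"answer": self._model.generate(tokens)}


api = ModelAPI(ToyModel())
response = api.handle_request({"prompt": "What is GenAI?"})
print(response["answer"])
```

The application only ever deals with `handle_request`, so the model behind it could be swapped or retrained without the application changing.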

What are the steps involved in building a foundation model?

As you need a lot of money to build a foundation model, you may not be about to embark on this expensive journey, but it’s useful to know the steps:

1. Define your objectives

Purpose: Understand the task you want it to perform and the type of capabilities required.

Scope: Decide if this is a general-purpose model or if it’s built for a specific domain (e.g. medical).

2. Data collection and preparation

You need to gather and process all the data. This is going to be ongoing because your data set will evolve:

- Data Gathering – Gather all the data that you need. When you have that data you’ll need to do some preprocessing.

- Data Cleaning – Process the data and remove noise, irrelevant information and sensitive or biased data.

- Data Labelling – This means adding informative labels to the data so that a machine learning model can learn from it. Labelling can be done manually, through crowdsourcing or automatically.
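Here is a rough sketch of what cleaning and labelling can look like on a tiny scale. The records, the email pattern and the keyword rules are all invented for the example; real pipelines are far bigger and usually rely on dedicated tooling.

```python
import re

# Simple pattern for spotting email addresses (sensitive data we want to mask).
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def clean(records):
    """Trim whitespace, mask emails, and drop empty or duplicate records."""
    seen = set()
    cleaned = []
    for text in records:
        text = EMAIL_PATTERN.sub("[EMAIL]", text.strip())
        if text and text.lower() not in seen:
            seen.add(text.lower())
            cleaned.append(text)
    return cleaned


def label(text):
    """Very naive automated labelling based on keywords (hypothetical rules)."""
    lowered = text.lower()
    if "refund" in lowered:
        return "billing"
    if "password" in lowered:
        return "account"
    return "other"


raw = [
    "  I want a refund  ",
    "i want a refund",
    "",
    "Reset my password, email me at jo@example.com",
]
dataset = [(text, label(text)) for text in clean(raw)]
print(dataset)
```

Four raw records go in and two labelled records come out: the duplicate and the empty record are dropped, and the email address is masked.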

3. Model selection, design and architecture

For building a model you have these options:

- Build one from the ground up – You may have unique requirements where there is no model available.

- Integrate with one through an API – For example, you can use GPT (ChatGPT uses this) via an API.

- Use an open source one – There are open source models available that you are free to use. You’ll need your own servers to process the data though!

Frameworks for building models

There are frameworks available that will help significantly reduce the time required to build and deploy models. For example, TensorFlow (from Google) and PyTorch.

4. Training the Model

Imagine you had a bunch of pictures of fruit and you want a computer program (model) to learn to tell which is which. You’d show the model the pictures and tell it which fruit each one is. The program then looks at each picture to try to understand its characteristics (e.g. size, shape, colour), and each picture is labelled with the correct answer. You are training the model!
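Here is the fruit idea as a tiny sketch. To keep it short, each fruit is described by two made-up numbers (weight and length) rather than a real picture, and the “training” simply works out the average apple and the average banana. It is a toy technique (nearest centroid), nowhere near how a foundation model is trained, but the loop is the same: show labelled examples, then ask about something new.

```python
from collections import defaultdict

# Each training example: (weight in grams, length in cm) plus the correct label.
# The numbers are invented for illustration.
training_data = [
    ((150, 7), "apple"),
    ((170, 8), "apple"),
    ((120, 18), "banana"),
    ((130, 20), "banana"),
]


def train(examples):
    """Learn one 'average fruit' (centroid) per label."""
    grouped = defaultdict(list)
    for features, fruit in examples:
        grouped[fruit].append(features)
    return {
        fruit: tuple(sum(values) / len(values) for values in zip(*rows))
        for fruit, rows in grouped.items()
    }


def predict(model, features):
    """Pick the label whose centroid is closest to the new fruit."""
    def distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(model, key=lambda fruit: distance(model[fruit]))


model = train(training_data)
print(predict(model, (160, 7)))   # a fruit the model has not seen before
```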

5. Performance evaluation

Test the model on various data sets to see how it performs on different tasks, measuring accuracy, robustness and fairness.

Based on the results, make some adjustments.
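As a rough sketch of what “measuring” means here, the snippet below computes overall accuracy and then accuracy per group, which is one simple way to spot a model that works well for some kinds of input and badly for others. The labels, predictions and groups are invented test results, not output from a real model.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the correct labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def accuracy_by_group(predictions, labels, groups):
    """Accuracy per group, a rough check that no group is served much worse."""
    results = {}
    for group in set(groups):
        idx = [i for i, g in enumerate(groups) if g == group]
        results[group] = accuracy(
            [predictions[i] for i in idx],
            [labels[i] for i in idx],
        )
    return results


# Hypothetical held-out test results.
labels = ["apple", "banana", "apple", "banana", "apple", "banana"]
predictions = ["apple", "banana", "apple", "apple", "apple", "banana"]
groups = ["photo", "photo", "photo", "sketch", "sketch", "sketch"]

overall = accuracy(predictions, labels)
per_group = accuracy_by_group(predictions, labels, groups)
print(overall, per_group)
```

In this made-up data the model gets every photo right but slips on the sketches, which is the kind of gap that would prompt an adjustment.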

6. Build applications

Now that you have the model available, applications need to be built to allow people to interrogate the model in a user-friendly way.

For example, ChatGPT was built to access the GPT model.

You have a choice here:

a) Create an API (application programming interface) which allows other people (internal or external groups) to easily interact with the model. The API hides the complexity of the model, so people can use it without understanding how it works inside.

b) If you make the model open source then people are free to use and adapt the model themselves.

c) You can build the applications yourself.

7. Maintenance

A model, like any piece of software, requires ongoing maintenance. There will always be issues with the accuracy of responses, delivering ethical responses etc.

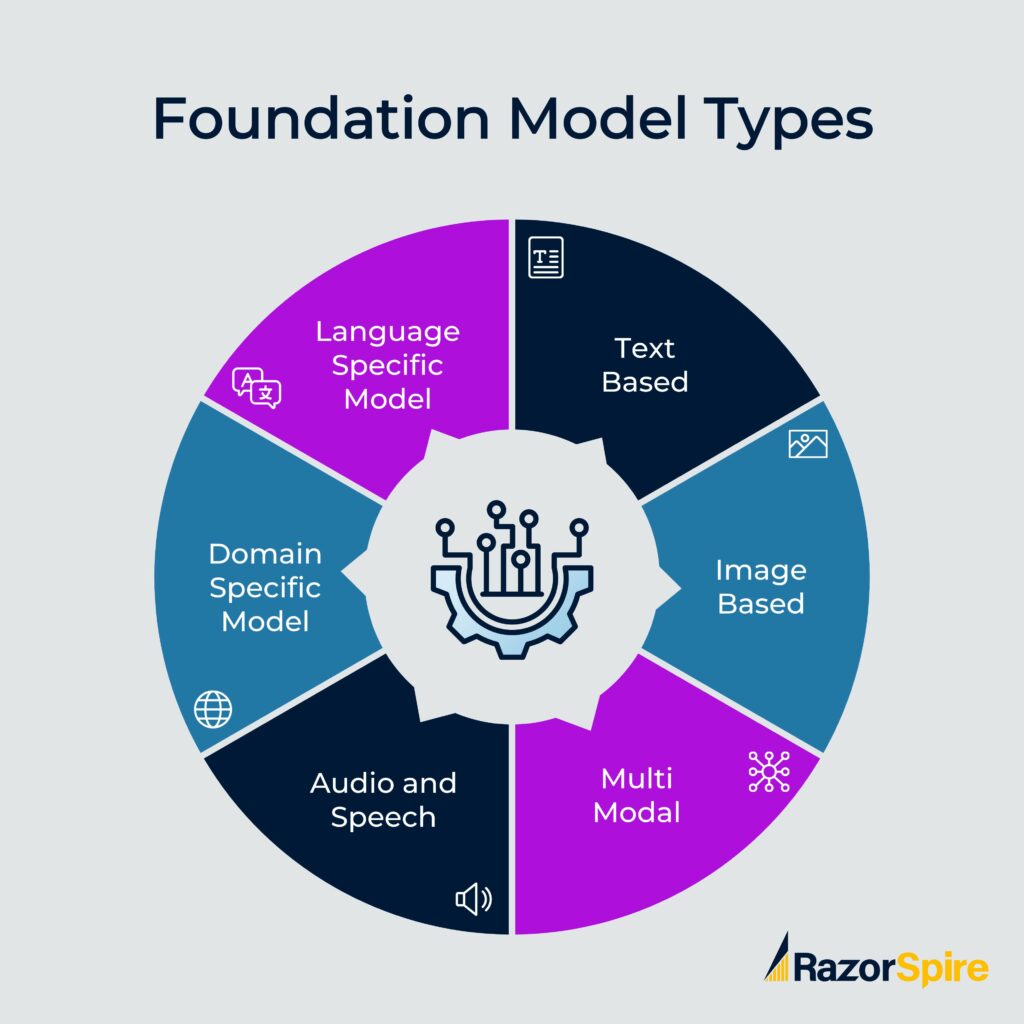

What are the types of foundation models?

When you’re building a model you’re building it for one or more types of operation. For example, if you’re building a model where you ask questions and get text based answers then you’ll want a model that is good at natural language processing.

If you are building a model where you also want to process images you’ll start with a different model type.

Here’s the categorisation based on the types of data they can process:

| Model Type | Example Model | Explanation |
| --- | --- | --- |
| Text based | GPT | GPT accepts text prompts and produces text responses. ChatGPT is based on GPT. Other example text models include Cohere Command, Claude and PaLM 2. |
| Image based | DALL-E | This generates images from text descriptions. An example is Copy.ai, a tool to help with marketing copy, which uses DALL-E for images. Stable Diffusion is another example of an image based model. |
| Multi Modal | GPT-4 | The initial version of GPT was text only but GPT-4 can also process images. Multi modal means one model can work with more than one type of data. Google’s Gemini is also multi modal. |
| Audio and Speech | WaveNet | These models convert text to speech and vice versa. Google Cloud Text-to-Speech was based on WaveNet. |
| Domain specific model | BioBERT | This was created specifically for the biomedical field. It’s still a foundation model because it’s based on so much data. |
| Language specific model | mBERT | This is not a large language model (LLM). It is a model trained on text in multiple languages, and it offers specialised understanding for each language. |

Text based Models

Natural language processing is where you can ask questions the way you’d ask a normal person a question and the model can understand what you are asking and provide conversational responses.

An LLM (large language model) was originally designed for processing and generating text, but over time many LLMs have become multimodal, so they can take imagery as an input and even produce it as an output.

Image Based Models

These models are used to understand and interpret visual data. They can perform image classification, object detection, image generation and more.

Multimodal Models

This is where you are combining multiple modes into one model. For example, a model that can process text and imagery.
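One way to picture this is as a question of which kinds of input a model accepts. The sketch below uses a made-up catalogue (`SUPPORTED_INPUTS`) and a made-up request format, purely to show that a request mixing text and an image can only go to a model that handles both.

```python
# Hypothetical catalogue: which kinds of data each model type can accept.
SUPPORTED_INPUTS = {
    "text model": {"text"},
    "image model": {"image"},
    "multimodal model": {"text", "image"},
}


def models_for(request_parts):
    """Return the model types able to handle every part of a request."""
    needed = {kind for kind, _ in request_parts}
    return sorted(
        name for name, kinds in SUPPORTED_INPUTS.items() if needed <= kinds
    )


# A request made of a text question plus an image (file name is illustrative).
request = [("text", "What fruit is in this picture?"), ("image", "fruit.png")]
print(models_for(request))
```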

Audio and Speech Models

Do I need to explain this one…ha ha!!

These can be used for speech recognition, changing text to speech and even music generation.

Domain Specific Foundation Models

These models are not general purpose. They are trained on a specific domain, but they are still foundation models for that domain. For example, you could have a foundation model for legal documents.

Language Specific Foundation Model

For most models you can ask questions and get answers in a variety of languages.

But they may not be built specifically for that country or language.

There are foundation models built for a particular country that are trained on all things related to that country, e.g. they would understand cultural differences.

What are Some Challenges of Foundation Models?

Infrastructure requirements – They require massive amounts of data processing and this needs to be done quickly. That’s one of the reasons NVIDIA’s share price is rocketing: they provide chips with advanced capabilities suited to AI.

Development work – It’s great having a foundation model but you do have to build your stack on top of this model which requires a lot of development work. It’s lucky that AI models can now produce code!!!

Accuracy – You pump in some data and train it but you don’t always get the right results. If your data is not great, your cleaning of this data is not perfect or your model is not good enough then you’ll produce some incorrect results. There’s an expectation that it delivers the right answer all the time but that’s not the case.

Bias – There is potential bias in the training data so you need to train the model well and build a stack that only delivers the most appropriate answers.

Summary

A foundation model is the ‘foundation’ of all things GenAI. The models will evolve and we’ll see a lot of specialised models develop over time. We have large language models now but we’ll have small ones as well. The computing power required for large models is huge so there will be alternatives.